Empirical Evaluation

Accuracy and Efficiency Evaluation in Renaming

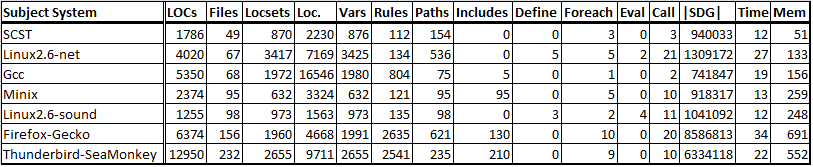

We selected the Makefiles of 7 subject systems from their open-source repositories, as shown in Table I.

Table I: Subject Systems and Build Code Information

We asked six Ph.D. students to independently identify the locsets for consistent renaming. We built a tool to assist them in this oracle-building task. For each string s, the tool performs a text search for all occurrences of s in a Makefile and the Makefiles it includes. The human subjects examined those occurrences and marked the ones that are variable names requiring consistent renaming as belonging to locsets.
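The oracle-building tool is described only at a high level. As a rough sketch, assuming the Makefiles are held in memory as a name-to-contents dictionary and that include directives name literal files (no `$(VAR)` expansion), the search could look like the following. `collect_makefiles` and `find_occurrences` are hypothetical names, not part of SYMake.

```python
import re
from typing import Dict, List, Set, Tuple

# Matches GNU Make include directives ("include", "-include", "sinclude").
# Assumption: included file names are literal; $(VAR) references are not expanded.
INCLUDE_RE = re.compile(r"^[ \t]*(?:-include|sinclude|include)[ \t]+(.+)$")


def collect_makefiles(root: str, files: Dict[str, str]) -> List[str]:
    """Return root followed by every Makefile reachable through includes.

    `files` maps a file name to its contents, standing in for the file
    system. Included names missing from `files` are skipped, and each file
    is visited once, so cyclic includes terminate.
    """
    order: List[str] = []
    seen: Set[str] = set()

    def visit(name: str) -> None:
        if name in seen or name not in files:
            return
        seen.add(name)
        order.append(name)
        for line in files[name].splitlines():
            match = INCLUDE_RE.match(line)
            if match:
                # One include line may list several files.
                for included in match.group(1).split():
                    visit(included)

    visit(root)
    return order


def find_occurrences(s: str, root: str, files: Dict[str, str]) -> List[Tuple[str, int, int]]:
    """Return (file, 1-based line, 0-based column) for each occurrence of s."""
    hits: List[Tuple[str, int, int]] = []
    for name in collect_makefiles(root, files):
        for lineno, line in enumerate(files[name].splitlines(), start=1):
            col = line.find(s)
            while col != -1:
                hits.append((name, lineno, col))
                col = line.find(s, col + 1)
    return hits


if __name__ == "__main__":
    demo = {
        "Makefile": "CC = gcc\ninclude rules.mk\nall:\n\t$(CC) -o app main.c\n",
        "rules.mk": "CCFLAGS = -O2\n%.o: %.c\n\t$(CC) $(CCFLAGS) -c $<\n",
    }
    for hit in find_occurrences("CC", "Makefile", demo):
        print(hit)
```

Because this is plain substring search, searching for CC in the demo also reports the prefix of CCFLAGS. Filtering out such matches is the manual step the human subjects performed.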

The entire process took more than 40 hours. In Table I, columns Locsets and Loc. show the number of locsets and the total number of locations in those locsets. A locset can contain multiple variables.

The complexity of the Makefiles is expressed via the numbers of program elements:

- LOCs: lines of code in the Makefiles
- Files: number of Makefiles
- Vars: number of variables
- Rules: number of rules
- Paths: number of branches within the Makefiles
- Includes: number of included Makefiles
- Define: number of variables defined using define
- Foreach: number of foreach statements
- Eval: number of eval statements
- Call: number of call statements
- |SDG|: total number of nodes in the corresponding SDGs
- Time: time needed to build the SDGs
- Mem: memory size (MB) of those SDGs
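The Define, Foreach, Eval, and Call columns could be approximated by counting textual patterns in the Makefile source. The sketch below is a hypothetical illustration of that idea (the names `PATTERNS` and `count_constructs` are invented here), not how the numbers in Table I were computed; it ignores comments and function names that are computed at run time.

```python
import re
from typing import Dict

# Hypothetical textual approximations of the Define, Foreach, Eval, and Call
# columns. They count surface patterns only: function names built at run time,
# or text inside comments, are not treated specially.
PATTERNS = {
    # A multi-line variable definition: "define NAME", optionally preceded
    # by "override" and/or "export".
    "Define": re.compile(r"^[ \t]*(?:(?:override|export)[ \t]+)*define[ \t]+\S", re.MULTILINE),
    # Function invocations written as $(fn ...) or ${fn ...}.
    "Foreach": re.compile(r"\$[({]foreach[ \t]"),
    "Eval": re.compile(r"\$[({]eval[ \t]"),
    "Call": re.compile(r"\$[({]call[ \t]"),
}


def count_constructs(text: str) -> Dict[str, int]:
    """Count define/foreach/eval/call constructs in one Makefile's text."""
    return {column: len(rx.findall(text)) for column, rx in PATTERNS.items()}


if __name__ == "__main__":
    makefile = (
        "define BUILD_template\n"
        "$(1): ; @echo building $$@\n"
        "endef\n"
        "PROGRAMS = app lib\n"
        "$(foreach prog,$(PROGRAMS),$(eval $(call BUILD_template,$(prog))))\n"
    )
    print(count_constructs(makefile))
```

Nested constructs such as $(foreach ...$(eval $(call ...))) are each counted once, which matches counting statements by their textual occurrences.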

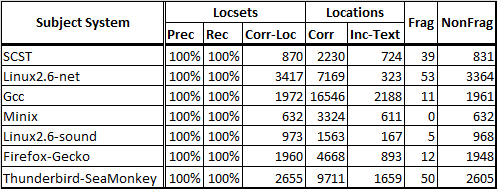

In Table II, precision (column Prec) is the ratio of correctly detected locsets to all detected locsets. Recall (column Rec) is the ratio of correctly detected locsets to the total number of locsets in the oracle. Column Inc-Text shows the number of incorrect locations reported by the text-search tool. Columns Frag and NonFrag show the numbers of locsets with fragmented and non-fragmented variables, respectively.
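The two ratios can be written down directly. The sketch below assumes a detected locset counts as correct only when it exactly matches an oracle locset; the text does not state the matching criterion, and the names `precision_recall`, `Location`, and `Locset` are illustrative.

```python
from typing import FrozenSet, Hashable, Iterable, Tuple

# Illustrative types: a location is (file, line, column) and a locset is the
# frozenset of locations that must be renamed together.
Location = Tuple[str, int, int]
Locset = FrozenSet[Location]


def precision_recall(detected: Iterable[Hashable], oracle: Iterable[Hashable]) -> Tuple[float, float]:
    """Precision = correct / detected, recall = correct / oracle.

    Assumption: a detected item is correct only if it exactly equals an
    oracle item. Empty denominators yield 0.0 by convention.
    """
    detected_set = set(detected)
    oracle_set = set(oracle)
    correct = len(detected_set & oracle_set)
    precision = correct / len(detected_set) if detected_set else 0.0
    recall = correct / len(oracle_set) if oracle_set else 0.0
    return precision, recall


if __name__ == "__main__":
    a: Locset = frozenset({("Makefile", 1, 0), ("rules.mk", 3, 3)})
    b: Locset = frozenset({("Makefile", 2, 8)})
    c: Locset = frozenset({("rules.mk", 1, 0), ("rules.mk", 3, 9)})
    d: Locset = frozenset({("Makefile", 5, 2)})
    # Two detected locsets, both correct, out of four in the oracle.
    print(precision_recall([a, b], [a, b, c, d]))  # (1.0, 0.5)
```

Because the function only relies on set membership, the same computation applies if the items are individual locations rather than locsets.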

Table II: Locset Detection Accuracy Result

Usefulness in Smell Detection and Renaming

Experiment Setting:

We invited 8 Ph.D. students at Iowa State University with 4 to 8 years of programming experience and divided them into 2 groups. We also provided a short training session for all subjects. We applied the crossover technique: Group 1 performed tasks 1, 3, and 5 using SYMake and the remaining tasks without it, while Group 2 did the opposite.

To view the assigned tasks, please visit [Controlled Experiment Tasks Section]

Controlled Experiment Results:

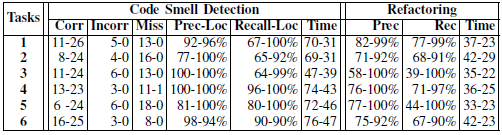

The tool's usefulness is measured via code quality and developer effort. For quality, in the detection tasks, we compared the numbers of smells that were correctly detected, incorrectly detected, and missed, without and with the tool (the two numbers are shown side by side in Table III). Precision and recall for location detection are given in columns Prec-Loc and Recall-Loc. In the renaming tasks, we calculated the precision (Prec) and recall (Rec) of the renaming locations. Developer effort is measured via finishing time; a subject who exceeded the time limit was required to stop.

Table III: Controlled Experiment's Result

- For detailed results of each subject, you can download the individual results. [All-Results.zip 95KB]

- For the aggregated results from all subjects, you can download [ControlledExperiment-Results.xlsx 22KB]